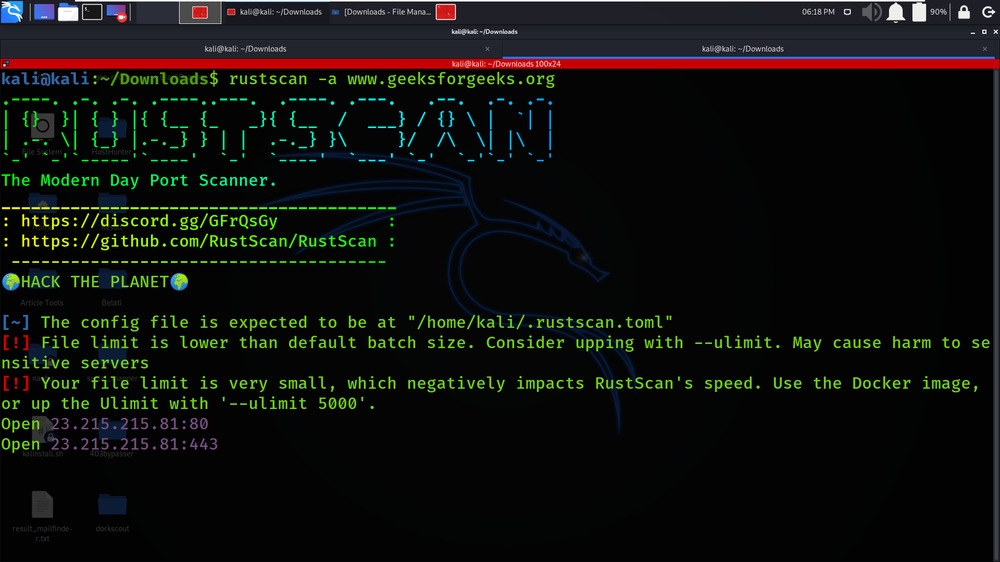

A couple of weeks ago hidden prompt injections were discovered and we covered it at the time.

This video explains it in more detail, and also highlights implications beyond hiding instructions, including what I call ASCII Smuggling. This is the usage of Unicode Tags Block characters to both craft and deciper hidden messages in plain sight.

Using Unicode encoding to bypass security features or execute code (XSS, SSRF,..) has been in use for a while, however this new TTP enables more sophisticated attack scenarios.

This is because Unicode Tags Code Point mirror the entire ASCII set, but are often not visible in UI elements. For Large Language Models this is interesting because LLMs often interpret this hidden text as ASCII, and they can also craft such hidden text when replying to user queries.

Take-aways

Couple of things come to mind and I touch on those in the video in more detail:

- Test your own LLM apps for this new attack vector

- As developer a possible mitigations is to remove Unicode Tags Block text on the way in and out

- Consider implications beyond LLM applications and Chatbots